// Feed raw audio files directly into the deep neural network without any feature extraction. //

As you may have noticed, conventional audio and speech analysis systems are typically built as a pipeline whose first step is to extract various low-dimensional hand-crafted acoustic features (e.g., MFCC, pitch, RMSE, chroma, and so on).

Although hand-crafted acoustic features are typically well designed, it is still not possible to retain all useful information, because of human knowledge bias and the high compression ratio. And of course, the feature engineering you have to perform depends on the type of audio problem you are working on.

But how about learning directly from raw waveforms (i.e., feeding raw audio files directly into the deep neural network)?

In this post, let's take what we can learn from this paper and try to apply it to the following Kaggle dataset.

Go ahead and download the Heartbeat Sounds dataset. Here is what one of the sample audio files from the dataset sounds like:

Each recording in the downloaded dataset is labelled either "normal", "unlabelled", or one of several categories of abnormal heartbeat.

Our objective here is to solve the heartbeat classification problem by directly feeding raw audio files to a deep neural network without doing any hand-crafted feature extraction.

Prepare Data

Let's prepare the data to make it easily accessible to the model.

extract_class_id(): Each audio file name contains its label, so let's separate the files based on their names and give each one a class id. For this experiment, let's treat "unlabelled" as a separate class. So, in total, we'll have 5 classes.
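A minimal sketch of what such a function could look like is shown here. The keyword list, the class ids, and the file names in the usage note are assumptions for illustration; check them against the file names in your copy of the dataset.

```python
import os

# Hypothetical keyword -> class id mapping (an assumption, not the original code).
# Adjust the keywords so that they match the labels found in your file names.
CLASS_KEYWORDS = [
    ("normal", 0),
    ("murmur", 1),
    ("extrahls", 2),
    ("artifact", 3),
    ("unlabelled", 4),  # kept as its own class, as described above
]


def extract_class_id(wav_path):
    """Return the class id encoded in the file name, or None if no keyword matches."""
    name = os.path.basename(wav_path).lower()
    for keyword, class_id in CLASS_KEYWORDS:
        if keyword in name:
            return class_id
    return None
```

With this mapping, a file named like `normal__201101070538.wav` would get class id 0, and any file that matches no keyword returns `None` so it can be skipped.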

convert_data(): We'll normalize the raw audio data and make all audio files the same length: files longer than 10 s are cut to 10 s, and shorter ones are padded with zeros. Finally, for each audio file, we put the class id, sampling rate, and audio data together and dump them into a .pkl file, making sure to keep a proper train/test split of the dataset while doing so.
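The steps above can be sketched roughly as follows. This is an illustration rather than the original code: it uses plain Python lists to stay dependency-free (in practice you would probably use NumPy arrays), peak normalization is an assumed choice, and the helper names, the record layout, and the 80/20 split are all hypothetical.

```python
import pickle
import random

TARGET_SECONDS = 10  # every clip is cut or padded to this duration


def fix_length(samples, sample_rate, seconds=TARGET_SECONDS):
    """Cut the clip to `seconds` of audio, or right-pad it with zeros if shorter."""
    target = sample_rate * seconds
    clipped = list(samples[:target])
    return clipped + [0.0] * (target - len(clipped))


def peak_normalize(samples):
    """Scale into [-1, 1] by the largest absolute value (assumed normalization).

    Silent clips (all zeros) are returned unchanged to avoid dividing by zero.
    """
    peak = max((abs(s) for s in samples), default=0.0)
    return [s / peak for s in samples] if peak > 0 else list(samples)


def convert_record(class_id, sample_rate, samples):
    """Bundle class id, sampling rate, and processed audio (hypothetical layout)."""
    audio = fix_length(peak_normalize(samples), sample_rate)
    return {"class_id": class_id, "sr": sample_rate, "audio": audio}


def split_train_test(records, test_fraction=0.2, seed=0):
    """Shuffle reproducibly and hold out `test_fraction` of the records for testing."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def dump_record(record, path):
    """Write one record to a .pkl file."""
    with open(path, "wb") as f:
        pickle.dump(record, f)
```

Note that a plain random split like this does not preserve class proportions; if some classes are rare, a stratified split may be a better fit.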

Create and compile the model

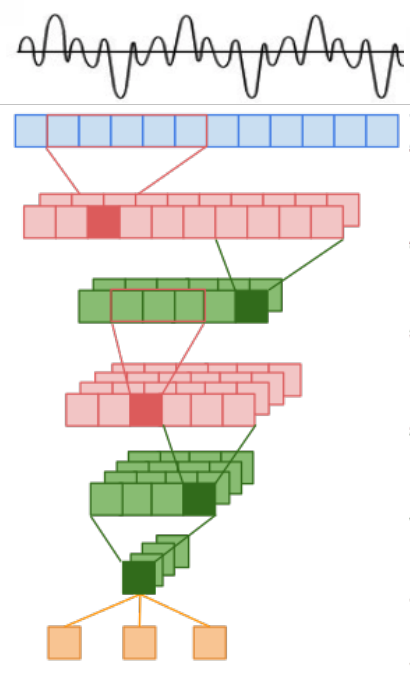

As written in the research paper, this architecture takes input time-series waveforms, represented as a long 1D vector, instead of hand-tuned features or specially designed spectrograms.

There are many models with different complexities explained in the paper. For our experiment, we will use the m5 model.

m5 has 4 convolutional layers, each followed by batch normalization and pooling. A Keras callback is also attached to the model to reduce the learning rate if the accuracy does not increase over 10 epochs.
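As a rough sketch of how this could be written with Keras: the layer sizes below follow my reading of the M5 column in the paper (a wide first convolution of length 80 with stride 4, then three convolutions of length 3, each followed by pooling of size 4). The `'same'` padding, the Adam optimizer, the loss, the reduction factor of 0.5, and the monitored metric name are assumptions, and all function names are hypothetical.

```python
# M5 layout: (filters, kernel_size, conv_stride, pool_size) for each block.
M5_BLOCKS = [
    (128, 80, 4, 4),
    (128, 3, 1, 4),
    (256, 3, 1, 4),
    (512, 3, 1, 4),
]


def trace_lengths(audio_length):
    """Return the time-axis length after each conv + pool block."""
    lengths = []
    n = audio_length
    for _filters, _kernel, stride, pool in M5_BLOCKS:
        n = -(-n // stride)  # 'same' convolution: ceil(n / stride)
        n = n // pool        # max pooling without padding: floor(n / pool)
        lengths.append(n)
    return lengths


def build_m5(audio_length, num_classes=5):
    """Build and compile an M5-style model for (audio_length, 1) inputs."""
    # Imported lazily so the layout above can be inspected without TensorFlow.
    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential([keras.Input(shape=(audio_length, 1))])
    for filters, kernel, stride, pool in M5_BLOCKS:
        model.add(layers.Conv1D(filters, kernel, strides=stride, padding="same"))
        model.add(layers.BatchNormalization())
        model.add(layers.Activation("relu"))
        model.add(layers.MaxPooling1D(pool_size=pool))
    model.add(layers.GlobalAveragePooling1D())  # average over the time axis
    model.add(layers.Dense(num_classes, activation="softmax"))
    model.compile(
        optimizer="adam",                  # assumed optimizer
        loss="categorical_crossentropy",   # expects one-hot encoded class ids
        metrics=["accuracy"],
    )
    return model


def make_lr_callback():
    """Reduce the learning rate when validation accuracy stalls for 10 epochs."""
    from tensorflow import keras

    # Older Keras versions report this metric as "val_acc" instead.
    return keras.callbacks.ReduceLROnPlateau(
        monitor="val_accuracy", factor=0.5, patience=10, verbose=1
    )
```

For a 10 s clip at an assumed sampling rate of 8 kHz (80000 samples), `trace_lengths(80000)` gives `[5000, 1250, 312, 78]`, which shows how quickly the large first stride and the pooling layers shrink the raw waveform.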

Start training and see the results

Let's start training our model and see how it performs on the heartbeat sound dataset.
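A training call along these lines would do the job. This is a sketch, not the original script: the function names are hypothetical, `model` is assumed to be a compiled Keras model, and the batch size of 128 is inferred from the progress counter in the log further down.

```python
import math


def batches_per_epoch(num_samples, batch_size):
    """Number of weight updates per epoch; the last batch may be smaller."""
    return math.ceil(num_samples / batch_size)


def train(model, x_train, y_train, x_val, y_val,
          callbacks=None, epochs=400, batch_size=128):
    """Fit a compiled Keras model and return its training history."""
    return model.fit(
        x_train, y_train,
        batch_size=batch_size,
        epochs=epochs,
        validation_data=(x_val, y_val),  # evaluated at the end of every epoch
        callbacks=callbacks or [],       # e.g. a learning-rate scheduler
    )
```

With 832 training clips and a batch size of 128, `batches_per_epoch(832, 128)` is 7, which matches the seven progress updates per epoch in the log.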

As per the above code, the model is set to train for 400 epochs. For me, however, the loss flattened out at 42 epochs, and these were the results. How did yours do?

    Epoch 42/400
    128/832 [===>..........................] - ETA: 14s - loss: 0.0995 - acc: 0.9766
    256/832 [========>.....................] - ETA: 11s - loss: 0.0915 - acc: 0.9844
    384/832 [============>.................] - ETA: 9s - loss: 0.0896 - acc: 0.9844
    512/832 [=================>............] - ETA: 6s - loss: 0.0911 - acc: 0.9824
    640/832 [======================>.......] - ETA: 4s - loss: 0.0899 - acc: 0.9844
    768/832 [==========================>...] - ETA: 1s - loss: 0.0910 - acc: 0.9844
    832/832 [==============================] - 18s 22ms/step - loss: 0.0908 - acc: 0.9844 - val_loss: 0.3131 - val_acc: 0.9200

Congratulations! You’ve saved a lot of the time and effort that goes into extracting features from audio files. Moreover, even when fed the raw audio files directly, the model does pretty well: about 92% validation accuracy in the run above.

With this, we learned how to feed raw audio files to a deep neural network. Now you can take this knowledge and apply it to the audio problem that you want to solve. You just need to collect audio data, normalize it, and feed it to your model.

The above code is available at the following GitHub repository

That's it for this post. My name is Vivek Amilkanthwar. See you soon with another post like this one; until then, Happy Learning :)

References:

1) https://arxiv.org/pdf/1610.00087.pdf

2) https://github.com/philipperemy/very-deep-convnets-raw-waveforms

3) https://openreview.net/pdf?id=S1Ow_e-Rb