Hi! This is Chinnapa, from Goalist. Today I decided to write an English post, because we clearly have fewer of those than we need :).

When improving a neural net (or any machine learning algorithm), one question inevitably comes up:

Will the algorithm do better if I give it more data?

The thing is, gathering more data often takes time, effort, and money, so it's worth thinking carefully before you start. Accurately diagnosing whether you need more data, and what kind of data to collect, can save you a lot of headaches.

Under-fitted models

One thing to consider is whether your algorithm is under-fitting your data. Your algorithm is under-fitting when:

- (a) it is not doing as well as you'd like (low accuracy or low F-score), and

- (b) it performs poorly on both the training set and the validation set, with a relatively small gap between the two scores.

In this case, gathering more of the same data is unlikely to improve your results significantly, if at all. Adding input features, however, may help. For example, if you're trying to predict a person's height from their age, you may want to collect additional features for each example, such as weight, rather than gathering more age -> height pairs.
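To make the height example concrete, here is a minimal sketch on hypothetical synthetic data (the generator `make_people` and its coefficients are invented for illustration), using a plain least-squares fit instead of a neural net. Because height in this toy data depends on weight, the age-only model's error stays high however many samples it sees, while adding weight as an input brings the error down to roughly the noise level.

```python
import random

def solve(a, b):
    """Solve the square linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_mse(rows, targets):
    """Fit ordinary least squares (with intercept) via the normal equations; return the training MSE."""
    xs = [[1.0] + list(r) for r in rows]
    k = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * t for x, t in zip(xs, targets)) for i in range(k)]
    w = solve(xtx, xty)
    preds = [sum(wi * xi for wi, xi in zip(w, x)) for x in xs]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

def make_people(n, seed=0):
    """Hypothetical synthetic data: height depends on age AND weight, plus a little noise."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        age = rng.uniform(5, 18)
        weight = rng.uniform(20, 80)
        height = 60 + 4 * age + 1.0 * weight + rng.gauss(0, 2)
        people.append((age, weight, height))
    return people

for n in (100, 10_000):
    people = make_people(n)
    heights = [h for _, _, h in people]
    age_only = fit_mse([(a,) for a, _, _ in people], heights)
    age_and_weight = fit_mse([(a, w) for a, w, _ in people], heights)
    print(f"n={n:>6}: age only MSE={age_only:.1f}, age+weight MSE={age_and_weight:.1f}")
```

Going from 100 to 10,000 samples barely moves the age-only error, because the missing information (weight) is simply not in the inputs.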

If, however, your existing data should already allow a reasonable prediction, then the problem probably lies in the model rather than in the quantity of data. One way to check this is to ask whether a human expert could make good predictions from the same input data.

For example, if your model is trying to label pictures and is under-fitting, increasing the resolution of the pictures (more input features) is unlikely to help: the model should be able to label them with the data it already has. As discussed above, adding more pictures is also unlikely to fix under-fitting, so your effort is probably best spent trying different neural network architectures (more filters in a conv net, more layers, etc.).

Over-fitted models

The opposite case is when your model is over-fitting your data: it does well on the training set but significantly worse on the validation set.
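The two definitions can be turned into a rough rule of thumb. Below is a minimal sketch, assuming scores where higher is better (accuracy, F-score); `diagnose_fit`, `target_score` and `max_gap` are hypothetical names and thresholds you would choose for your own problem. It checks the train/validation gap first, so a model that is poor on both sets but also has a large gap is reported as over-fitting.

```python
def diagnose_fit(train_score, val_score, target_score, max_gap=0.05):
    """Rough fit diagnosis from scores where higher is better (e.g. accuracy or F-score).

    target_score and max_gap are hypothetical thresholds picked for your own problem.
    """
    gap = train_score - val_score
    if gap > max_gap:
        return "over-fitting"   # good on training data, clearly worse on validation data
    if train_score < target_score:
        return "under-fitting"  # poor on training data, and (given the small gap) on validation too
    return "ok"

print(diagnose_fit(train_score=0.71, val_score=0.69, target_score=0.90))  # under-fitting
print(diagnose_fit(train_score=0.99, val_score=0.80, target_score=0.90))  # over-fitting
```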

Here too, it is wise to perform an error analysis before deciding to gather more data. Actually looking at each mistake the model makes can help significantly. There may be some type of skew or bias in your training set. Maybe you didn't shuffle the training and validation data? Maybe your model is picking up noise or signals that are unrepresentative or don't make sense?
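Two simple error-analysis checks can be sketched in plain Python: counting which (true label, predicted label) pairs account for the mistakes, and comparing label proportions between the training and validation sets (a lopsided split is one symptom of unshuffled data). The helper names and toy labels below are hypothetical, for illustration only.

```python
from collections import Counter

def confusion_pairs(y_true, y_pred):
    """Count (true label, predicted label) pairs for the examples the model got wrong."""
    return Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)

def label_share(labels):
    """Fraction of examples per label, for comparing training and validation sets."""
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}

# Hypothetical toy labels, for illustration only.
y_val = ["cat", "cat", "dog", "dog", "bird", "bird"]
y_pred = ["cat", "dog", "dog", "cat", "bird", "dog"]
y_train = ["cat"] * 8 + ["dog"] * 2

print(confusion_pairs(y_val, y_pred).most_common())  # most frequent mistakes first
print(label_share(y_train))  # training set has no "bird" examples at all
print(label_share(y_val))
```

In this toy data the training set contains no birds at all, so a skew like that would show up immediately when the two distributions are printed side by side.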

LIME (https://github.com/marcotcr/lime) is one tool that can help you analyze why your model is making the decisions it makes.

You also have two amazing tools that can mimic some of the effects of gathering more data:

Regularization

Regularization adds a term to your model's loss that does not depend on how well the model fits the data. One common example is making the magnitude of the weight matrices contribute to the loss (L2 regularization, for instance, adds the sum of the squared weights multiplied by a constant). This discourages your model from trying to account for the peculiarities of the anomalies that almost certainly exist in your data.
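Here is a minimal pure-Python sketch of an L2 penalty of the same form that Keras's `l2()` regularizer adds: `lam` times the sum of squared weights. The helper names are hypothetical. With the same data loss, the model with larger weights ends up with a larger total loss, so training is pushed toward smaller weights.

```python
def l2_penalty(weight_matrices, lam):
    """L2 regularization term: lam times the sum of squared weights over all given matrices."""
    return lam * sum(w * w for matrix in weight_matrices for row in matrix for w in row)

def regularized_loss(data_loss, weight_matrices, lam):
    """Total loss = loss on the data + a penalty that depends only on weight magnitudes."""
    return data_loss + l2_penalty(weight_matrices, lam)

small_weights = [[[0.1, -0.2], [0.3, 0.0]]]
large_weights = [[[1.0, -2.0], [3.0, 0.0]]]
print(regularized_loss(0.5, small_weights, lam=0.01))  # penalty is tiny
print(regularized_loss(0.5, large_weights, lam=0.01))  # same data loss, larger total loss
```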

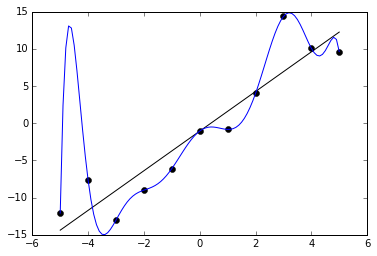

Essentially it stops this:

Dropout

Dropout layers are a simple and extremely effective tool: during training only, they randomly drop (set to zero) a fraction of the units in a layer of the neural net. As a result, the model cannot fixate on a few individual features and is forced to use all the information available in the data to make predictions. Note that you will usually need to train for more epochs when you use dropout.
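The mechanism can be sketched in a few lines of plain Python. This assumes the "inverted dropout" scheme that Keras's `Dropout` layer uses: during training each unit is zeroed with probability `rate` and the surviving units are scaled by `1 / (1 - rate)`, so the expected activation is unchanged; at inference the layer does nothing. The `dropout` function here is a hypothetical illustration, not the Keras implementation.

```python
import random

def dropout(activations, rate, training, rng=random):
    """Inverted dropout on a list of activations.

    Training: each unit is zeroed with probability `rate`; survivors are scaled by 1 / (1 - rate).
    Inference: returns the activations unchanged.
    """
    if not training or rate == 0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)
layer_output = [0.5, 1.2, -0.3, 2.0, 0.8, 1.1]
print(dropout(layer_output, rate=0.5, training=True, rng=rng))  # each unit is either zeroed or doubled
print(dropout(layer_output, rate=0.5, training=False))          # unchanged
```

Scaling at training time (rather than at inference) is what lets the trained network be used as-is in production.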

Here's what a simple net using both techniques might look like in Python/Keras:

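```python
from keras.layers import Input, Dense, Dropout
from keras.models import Model
from keras.regularizers import l2

num_features = 100    # placeholder: number of input features in your data
category_count = 10   # placeholder: number of output classes

input_features = Input(shape=(num_features,))
# Two hidden layers, each with an L2 penalty on its weights followed by 50% dropout
processing = Dense(1024, activation='relu', kernel_regularizer=l2(0.01))(input_features)
processing = Dropout(0.5)(processing)
processing = Dense(1024, activation='relu', kernel_regularizer=l2(0.01))(processing)
processing = Dropout(0.5)(processing)
output = Dense(category_count, activation='softmax')(processing)
model = Model(inputs=input_features, outputs=output)
```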

The kernel_regularizer parameter takes a regularizer that keeps the layer's weights from growing too large. l2() is one of the built-in regularizers that come with Keras, and its parameter, 0.01, is the lambda multiplier (increasing it increases the strength of regularization). Dropout(0.5) is a dropout layer that drops each unit with 50% probability on every training step. Dropout is only applied during training, so it won't hamper your model in production.

In summary

When evaluating your model and deciding how best to improve it, the first step is diagnosing which problem you're trying to solve: under-fitting or over-fitting. This will guide you on whether you need more of the same type of data, new features, or whether your data is relevant to your problem at all.

Next, in the case of over-fitting, experiment with regularization and dropout.

Finally, once you are convinced that your model is actually over-fitting, and neither regularization nor dropout has improved it sufficiently, it is worth setting off down the arduous road of gathering more data.

Best of luck!